Time for a little more on the APT.

Well first, let's call a spade a spade: it's the state-sponsored recon, intrusion and theft of key industrial, financial, and military assets. There, now that we're no longer jumping at shadows because of this "new threat", we can discuss a method of detecting the APT. We've established that these adversaries are intelligent, efficient and worthy enemies, but here's the thing: they are an anomaly. In a well-managed environment, even one that contains tens of thousands of hosts, they stick out. Even in a moderately well-managed environment, they are still an anomaly. So, you're asking, how does one detect them?

1) Use Anomaly Detection.

Suppose for a moment that you're monitoring at the perimeter, or even the core of your network. You don't typically allow Remote Desktop connections from the outside world, but your organization has made allowances in particular places. OK, great, so now you've got an attack vector. How are you monitoring it?

How about the following:

Administrative (or any other) RDP Sessions from China

New Services being installed

Network behavioral changes

How would you determine this, you might be asking? Well, let's evaluate how we can detect them.

1) The endpoint itself

Perform simple checks against service listings. For instance, services that don't belong:

Like one called MCupdate (McAfee Update) on a system running Symantec Antivirus.

Or services calling a DLL that is named incorrectly in the 'Path to executable' field.
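The first of those checks can be sketched in a few lines of Python. This is only an illustration: it assumes the service list has already been exported as name/path pairs, and the `ServiceEntry` type, the `VENDOR_HINTS` table and the `suspicious_services` helper are hypothetical names made up for the example, not part of any particular tool.

```python
from dataclasses import dataclass

# Hypothetical keyword table: substrings of a service name mapped to the
# vendor they imply. Extend it for your own environment.
VENDOR_HINTS = {
    "mcupdate": "mcafee",
    "mcshield": "mcafee",
    "symantec": "symantec",
    "norton": "symantec",
}


@dataclass
class ServiceEntry:
    name: str
    path: str  # the 'Path to executable' value


def suspicious_services(services, installed_av):
    """Return (service, reason) pairs for services that imply the wrong vendor."""
    findings = []
    for svc in services:
        lowered = svc.name.lower()
        for hint, vendor in VENDOR_HINTS.items():
            if hint in lowered and vendor != installed_av.lower():
                reason = f"implies {vendor}, but host runs {installed_av}"
                findings.append((svc, reason))
                break  # one finding per service is enough
    return findings
```

Feed it the service listing from a host you know runs Symantec and anything claiming to be a McAfee updater falls out immediately.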

Look for Executables in places where they don't belong such as:

C:\Windows\System32\Config

or having hashes similar to the following:

768:xRhzolZP75giNs7WPaLr1JWa304IvwghoPTrH2oI:zhzol8iWWPkrDWa3vCw9TCo

384:MUYJfQuuOZ2XYiUj/S0AL6hImJbiGwSeulswOezXzFdlIWO+RbBzqTqoMefZx966:EoVOZ2c/1S6xwS/dzDFpRbErx9b

6144:U1cKrvLpMm6Yo9VtJNcUTqoFDf4OUOsrhnte38uyLdQn528Igf0qSI8N5yhFa0y4:KbvujF97J+atUvr3pLdQn52XgMNAFa0p

Did someone say fuzzy hashing was cool? Yeah it's very cool..thanks Jesse.
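The location check above (executables where they don't belong) can be sketched in Python as well. This is a rough illustration that only looks for the two-byte "MZ" magic at the start of each file; `find_pe_files` is a hypothetical helper name, and the fuzzy hash comparison itself is not shown because it needs a fuzzy hashing tool or library.

```python
import os


def find_pe_files(root):
    """Yield paths under root whose first two bytes are the 'MZ' executable magic."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as handle:
                    if handle.read(2) == b"MZ":
                        yield path
            except OSError:
                # Locked or unreadable files (common on a live system)
                # are skipped rather than treated as findings.
                continue
```

Pointing it at a directory such as C:\Windows\System32\Config, where executables have no business living, gives a short list of files worth hashing and examining.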

2) The network, through an IDS or NBAD looking for the following type of traffic:

Administrative RDP connections:

alert tcp $EXTERNAL_NET any -> $HOME_NET 3389 (msg:"POLICY RDP attempted Administrator connection request"; flow:to_server,established; content:"|E0|"; depth:1; offset:5; content:"mstshash"; distance:0; nocase; pcre:"/mstshash\s*\x3d\s*Administr/smi"; reference:bugtraq,14259; reference:cve,2005-1218; reference:url,www.microsoft.com/technet/security/bulletin/MS05-041.mspx; classtype:misc-activity; sid:4060; rev:3;)
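If you want to run the same test against captured payloads outside of an IDS, the rule's matching logic translates roughly as follows. This is a sketch, not a replacement for the rule: `is_admin_rdp_request` is a hypothetical helper, and it assumes you already have the first client payload of the TCP session as bytes.

```python
import re

# Same pattern as the rule's pcre option; the /smi flags map to
# DOTALL, MULTILINE and IGNORECASE.
MSTSHASH_ADMIN = re.compile(rb"mstshash\s*\x3d\s*Administr", re.S | re.M | re.I)


def is_admin_rdp_request(payload: bytes) -> bool:
    """True if the payload looks like an RDP connection request for Administr*."""
    # content:"|E0|"; depth:1; offset:5 means byte 5 must be 0xE0.
    if len(payload) < 6 or payload[5] != 0xE0:
        return False
    # content:"mstshash"; distance:0 means the cookie follows that byte.
    return MSTSHASH_ADMIN.search(payload, 6) is not None
```

Like the rule, this only catches clients that send the username cookie in the connection request, so treat a miss as "not seen" rather than "not happening".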

3) Anomalous traffic:

Traffic that doesn't belong on certain ports:

For instance, non-HTTPS traffic on port 443 or non-DNS traffic on port 53.

Example: the string [SERVER] doesn't belong anywhere on port 443.
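A crude version of the port 443 check can be sketched in Python. It assumes you have the first client payload of a session as bytes, and it only checks whether that payload starts like an SSL 3.0/TLS record; the function names are hypothetical. Note that an old SSLv2-style hello would also be flagged by this heuristic, so expect to tune it.

```python
# TLS record content types: 20 change_cipher_spec, 21 alert,
# 22 handshake, 23 application_data.
TLS_CONTENT_TYPES = (20, 21, 22, 23)


def looks_like_tls(payload: bytes) -> bool:
    """Heuristic: does the payload start with an SSL 3.0/TLS record header?"""
    if len(payload) < 3:
        return False
    content_type, major = payload[0], payload[1]
    # The record-layer major version is 3 for SSL 3.0 and for TLS.
    return content_type in TLS_CONTENT_TYPES and major == 3


def flag_port_443_payload(payload: bytes) -> bool:
    """Return True when the payload does not belong on port 443."""
    return b"[SERVER]" in payload or not looks_like_tls(payload)
```

The same idea applies to port 53: decide what well-formed traffic looks like, and flag whatever doesn't fit.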

4) Behavioral changes in the system.

If the system never listens on port 443, and all of a sudden it begins communicating with China on port 443, that's an anomaly.

If the system never visits defense contractors or manufacturers, and all of a sudden it begins doing so, that's an anomaly.

If a webserver typically receives 10,000 visits per day during business hours and all of a sudden it's receiving 30,000 and there was no product release or new project etc.. that's an anomaly.

You can then add time, rates, and frequencies into the algorithm to tune the detection.

Some other food for thought. Don't rely upon your antivirus products to protect you. The attackers change their code regularly, and it will not be detected.

And finally, these guys are good. Very good. But they are human, and while they cover their tracks and hide well, they are fallible. They are creatures of habit and they can be profiled. They do their best to blend in by using built-in tools along with their own, but they can be found. They are an anomaly.
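The behavioral examples above boil down to comparing what a system does now against a baseline. Here is a minimal Python sketch of two such checks, one for never-before-seen ports and one for visit volume. The function names, the three-standard-deviation threshold and the sample numbers are assumptions chosen for illustration, not a recommended tuning.

```python
from statistics import mean, stdev


def new_destinations(baseline_ports, observed_ports):
    """Ports a host uses now that it never used during the baseline window."""
    return sorted(set(observed_ports) - set(baseline_ports))


def is_volume_anomaly(history, today, threshold=3.0):
    """Flag today's count if it sits more than `threshold` standard deviations above the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today > mu
    return (today - mu) / sigma > threshold


# A web server that normally sees about 10,000 visits per day.
daily_visits = [9800, 10100, 10050, 9900, 10150]
print(is_volume_anomaly(daily_visits, 30000))
print(new_destinations([53, 80], [53, 80, 443]))
```

Time of day, rates and frequencies slot in the same way: keep a baseline per hour or per destination instead of one number per day.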

Monday, November 16, 2009

Tuesday, November 10, 2009

SPILLED COFFEE...who cares?

So COFFEE got leaked..is anyone surprised?

I liken this story to the fact that radar detectors exist to evade speed traps. The truth of the matter is, when you speed you're bound to get caught regardless of your knowledge of radar or laser guns and regardless of the fact that your detector is beeping. Typically by the time your detector is beeping loudly enough for you to pay attention you're already painted and are in the process of being pulled over. Many people are so convinced that COFFEE is this panacea of LE forensics capabilities that the leaking of it will spell doom and disaster for Law Enforcement everywhere. Boy will they be surprised when they learn what it's made of.

"But they'll detect it and subvert it"

Maybe they will, maybe they won't. Does it really make a difference? This is part of the game. The tool was widely released, so why is this leak a shock? If the computer is the only source of evidence in a case, then you don't have that strong of a case to begin with. Even so, police raids and seizures are not exactly broadcast to the suspect. COFFEE is a meta-tool anyway, or a tool made up of tools, just like every other live toolkit. COFFEE is not magic. It's a script.

"But now that they know what it does they can prevent it from being useful"

Funny, the same was said of just about every forensics tool out there. The good guys have a toolset, just as the bad guys do. Who can use their tools more effectively?

"But but but...the sky is falling!"

No, Chicken Little, the sky is not falling...it's just another acorn.

Why limited privileges don't matter

One day, financial administrative officer Jane Q. received an email from the bank. It read "Dear valued customer, we need to validate your account due to a system upgrade. Please click the following link[..]" Jane, not wanting to lose access to the account, clicked the link and got infected with ZeuS. Unknown to Jane, her stored IE passwords were immediately offloaded. Later that day, when she went to do her daily "close of business" process, there were some additional fields on the affiliate banking website her company partnered with. "Hmm, must be that upgrade they did," she thought to herself. She happily entered the requested information. The next day, Jane opened up the same site, but there was a problem. The account was missing $400,000! It was discovered that Jane's credentials had been compromised, the account had been drained, and the money had gone to three dozen accounts all over the world.

How could this have happened? Jane only had user level privileges.

For years, the common thought has been to follow the Principle of Least Privilege, which is to say: don't give people more rights than they need to do their job or, in a Windows-centric world, no administrative access.

What if the job requires access to the company finances, and the position is authorized to transfer funds? Limiting the privilege of the user on the operating system is of no consequence. When sensitive data is accessed by authorized users, it becomes exposed to processes designed to steal it running with the privileges of the authorized user. Simple concept, right? This concept has been overlooked for years because it didn't matter. For years, restricted rights meant no compromise of consequence. Those days are gone.

It used to be that malware wouldn't fully function unless it was originally executed with administrative or higher-level privileges. If executed with limited privileges, it would run until the computer rebooted, but it could not establish a persistence mechanism and did not have access to key parts of the operating system.

Modern malware, as many are aware, no longer requires administrative privileges to execute, communicate and establish persistence. The "bad guys" figured out that we, the "good guys", started restricting admin rights. Big shocker, right? They figured out how to use Windows variables and stopped hard coding %systemdir%. They figured out that those rights weren't required to achieve their objective. Accounts were decoupled from the system and re-coupled with the data those accounts have access to. If your goal is data theft, then full access to the system isn't required. Access to the account that has access to the data is all you need. I refer back to Marc Weber Tobias..."The key does not unlock the lock, it actuates the mechanism which unlocks the lock".

These days the only benefit to restricting privileges is to limit the scope of the damage caused by a compromise. Limiting privileges does not prevent compromise. It's still a good practice, but the myth that limiting privileges will prevent compromise has been BUSTED.

Monday, August 24, 2009

New tools on the horizon

Been busy again but here's a brief update..

Recently I read about the upcoming release of AccessData FTK 3.0. Yikes! 3.0 so soon? If you ask me, it looks like AccessData wants to get away from the 2.0 brand name and on to something that may appeal to most people.

Why am I excited by 3.0? It's really quite simple. 3.0 allows you to have 4 workers for the same price as the one worker that was available in 2.x. Hopefully the processing speed is infinitely faster, assuming they did it right. With 2TB drives being available I don't really see another way for the common examiner to keep up, especially when you have to do full indexes, hashing, carving and so on. Here's to hoping that 3.0 lives up to the marketing slicks...and for AccessData's sake let's hope it does.

What else is coming? The Image Masster Solo-4. Now this device looks appealing to me as it meets my current requirement set for a hardware imaging device. It supports encryption of the image on the fly using ICS drive cypher. It can send the image over the network through a 1 Gb interface. It runs a Windows XP OS? That has me a little worried (imagine the imaging device getting compromised by a network worm if used in a hostile network environment), but to be honest I don't know enough about it just yet. The device will be around $2500 according to the rep I spoke to.

HBGary expanded Responder Pro to include some very interesting tools like REcon, and C# scripting capabilities. FastDump Pro also got a bit of a facelift to include Process Probing via the -probe switch. Basically you take a process and force all of its paged-out memory back into physical memory for analysis. More on these developments soon.

Friday, July 31, 2009

Reasonable Belief - Depth of Penetration

This is a v.1 figure. Comments, suggestions welcome.

Back in March I began with a high-level overview of reasonable belief as it applies to intrusions and notification. I'd like to take a little time to examine the Depth of Penetration as it applies to reasonable belief to see where I end up.

First some criteria.

Depth of Penetration can be simply defined as: The scope of access to resources gained by an intruder.

Major questions to answer:

What account(s) were compromised?

What level of privilege does the account have?

What systems were accessed during the Window of Risk?

What data is the account authorized to access?

What data are at risk?

We also collect system meta-information. This includes:

Who has administrative rights

Who has access to it

What role the system holds in the organization

Where the system is accessible from

What IP address it uses

Discussion:

The objective in establishing depth of penetration is to determine what the intruder compromised, had access to, and the level of privilege obtained.

When a system or network is penetrated by an attacker, an account is involved, even if the account is an anonymous or guest account. If the account is used by an attacker, it is considered compromised. This account will be authorized to access specific resources within a network or system. The intruder will therefore have credentials to access systems and data.

Can a domain or local system account be compromised, and not have resource accounts compromised? Yes. Let's say my local system gets hacked into and my domain login is compromised. I also have accounts on an ftp server, a web server, a database server, and email. When my domain account is compromised, it does not mean that the other accounts were compromised. If my domain account is compromised, we need to establish the authentication and authorization methods used on each of the resource systems. If the AAA is integrated with the domain, then the attacker will potentially have carte blanche access to all of the resources and data that I have access to. If AAA is not domain integrated, then an investigation into each of the resources I have access to is required to determine the veracity of the claim that other accounts/resources and data are at risk. This establishes scope.

Suppose a keylogger were installed on my machine. Does that mean that all of my resource accounts were compromised? Again, that's not necessarily true. We can assume the worst and say that everything I have access to is compromised because there was a keylogger on the system. We can also go the route of "whatever is in the keylog file is what was compromised". Which is correct? In reality, neither is entirely right and neither is entirely wrong. The only way to truly determine the correct path here is to examine the keylogger and its logging mechanisms. Does it write to a buffer and mail it out? Does it log it to a file? Is the file encrypted? Can you decrypt it? This also puts too much emphasis on the keylogger. An examination of other artifacts is required to validate any conclusions drawn from a keylogger examination.
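The scoping decision in the domain account example can be written down as a simple partition. This is only a sketch of the reasoning, in Python; the `Resource` type, its `domain_integrated` flag and the `scope_resources` helper are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    domain_integrated: bool  # True if AAA is tied to the domain account


def scope_resources(resources):
    """Split resources into presumed-exposed and needs-investigation lists."""
    presumed_exposed = [r.name for r in resources if r.domain_integrated]
    needs_investigation = [r.name for r in resources if not r.domain_integrated]
    return presumed_exposed, needs_investigation


# Example inventory for one compromised domain account.
inventory = [
    Resource("email", True),
    Resource("database server", True),
    Resource("ftp server", False),
    Resource("web server", False),
]
exposed, to_investigate = scope_resources(inventory)
```

Everything in the first list is treated as reachable with the stolen domain credentials; everything in the second still needs its own examination before it is ruled in or out.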

In a third scenario, let's say a system is compromised and a packet sniffer is installed. The depth of the penetration can be difficult to establish in this scenario because many organizations do not log internal network traffic. We must determine what data travelled to/from the system, or was sniffable by the system.

In a fourth example, consider that I am a user working from a desktop machine. I have no privileges beyond an authenticated and valid user account. I am, in other words, a "regular user". I visit a website and contract a malware infection. This malware provides remote control over my system, and does not require administrative privileges. The system is now "botted". The person, assuming there is one, at the other end of the connection now has access to whatever I have access to, and may be able to escalate privileges. In this scenario we need to determine if the attacker escalated privilege, and to what degree. In addition we must examine what actions I took while infected: What intranet sites were visited? What systems did I log in to or access? What data did I work with or access during the compromise window? What data did my account have access to?

These examples are slight digressions from the singular topic of Depth of Penetration, but they are important to establishing the actual depth of the penetration.

How does Depth of Penetration actually inform reasonable belief?

Remember that Reasonable Belief is what a layperson believes given similar circumstances. A decision maker is more likely to believe that data is at risk and/or compromised when there is no hard evidence to confirm or refute the data loss if the intruder gained access to a resource with the authorization to read the data stored therein. In the eyes of the layperson, access often equals acquisition. When an attacker gains elevated privileges on a system containing sensitive data, a layperson will inherently lean towards a reasonable belief that the data was acquired. Conversely, a layperson will be less likely to believe data was acquired if elevated privileges were not obtained, even if the compromised account had direct access to sensitive data. In addition, a layperson tends to think less is at risk when a compromise affects one system than they do of a critical or multiple system compromise. These beliefs are commonly strengthened if the examination lacks depth and does not provide a more plausible explanation.

To be effective, this portion of the examination must be able to show in enough detail:

The accounts used by the intruder

Which systems were compromised or used by the intruder

What level of privilege each account had on each system accessed by the intruder

Whether the account was able to access and/or acquire the data from each system

What data was present

Tuesday, July 28, 2009

Don't worry it's just cybercrime

In countries with corrupt politicians (that's all of them, isn't it?), corrupt authorities and corrupt businesses, criminals reign supreme. Throw off years of oppressive government and what do you have? You have Ph.D.s in engineering, computer science, economics, and yes...rocket science sitting around wondering what to do with themselves. The weight has been lifted and now there's nothing to do with a fantastic education, so they apply their skills where they're needed. They do anything and everything to survive and ultimately thrive. In a country with no authority figures that can't be bribed and businesses looking to establish themselves, there are two primary motivating factors: Money and Power, Power and Money. In countries full of people with nothing to lose, these two factors become the keystones of Maslow's hierarchy.

Survival mode: the purpose of survival mode is to "get yours" at whatever the cost. You do what it takes to get a loaf of bread, to secure your family, to protect yourself and those you care about. The now abandoned Ph.D.s have a new purpose and it's money. Money and Power, Power and Money...Money=Power. Those without money and power will always be subject to those that have it, especially in transition economies with weak governments. These enterprising individuals have been swept up into the world of organized crime and they're loving it. What's not to love? The money, the power, the women, the cars, the lifestyle? It's easy to love it when it's going well. That's right..all the hallmarks of modern organized crime exist and it's going well, very well. If they can keep the cash flowing, they can continue to pay off authorities, and the businesses are clamoring all over each other for their piece of the pie and they're willing to do whatever it takes as well.

Organized crime has existed for centuries and it's just recently branched into the digital realm. Why should anyone be surprised by this? It's a target-rich environment, the risks are low, the rewards are high, and internationally there is nothing stopping you. There are whole new rackets, and re-invented rackets that are applied. Intimidation, fake lotteries, scams, protection, extortion, trafficking, controlling and influencing industries (Gas & Oil, construction)...sounds familiar, doesn't it? This is nothing new, they've just adapted. Let's say that again...this is nothing new, they've just adapted. Since the dawn of crime, there's been a fight against it. That's right, this fight has been fought before, but many pieces had to fall into place for that fight to truly take place. The following components are missing from this new fight.

- Government

- Law Enforcement

- Populace

Security researchers, security companies, all are saying "Oh my god cybercrime is this terrible thing and it's huge!" We read headlines detailing hundreds of thousands of identities being stolen, of large sums of money being lifted from bank accounts, of thousands of credentials being compromised. Meanwhile the rest of the world just keeps on ticking, moving forward like nothing is happening.

One has to ask..do they care? There are no bombs, no known murders associated with cybercrime gangs (at least I don't know of any..if you do, tell me). Cybercrime has been relegated to the realm of "nuisance" crime, right next to harassment and stalking. Computers are still seen as magic, and cybercrime is seen as smoke, mirrors and illusion. It's not a personal crime, and the pain is temporary for most, and not all that painful compared to a personal crime. Ask a cop to investigate cyber crime and expect to get asked which murder shouldn't be investigated so your cyber crime can be.

And then there's the lack of understanding. Identity theft is a paper crime. Your identity gets stolen and you get a letter in the mail saying "There is no evidence to suggest...." or "We don't believe...[]..but here's some credit monitoring just in case." That's it...poof, it's gone like vapor. Whether it's apathy, lack of understanding, or lack of pain and suffering, the crime is never fully understood or cared about. In reality, the company that wrote the letter has no idea, and they hope that your identity doesn't get stolen, and it's not because they actually care about you; they care about the price of their stock, their shareholders, their brand.

This lawless world of crime without punishment will soon result in what it has always resulted in...vigilante or shadow organizations and "private security" companies stepping up for hire to take the fight to the enemy. They will exploit the lack of policy and enforcement for gain.

Some time ago I met with a few FBI agents and when they said they wanted to help in any way they could I kept thinking to myself...You want to help? Put tac teams in Odessa, Kiev, Little Odessa and starting arresting or shooting. Find a way to make these ventures risky, costly and unappealing. The new breed of criminal is not nearly as secretive as those from the older mold. So exploit their egos. Poison the money sources, do something other than build a case against people you can't prosecute. Infiltrate, manipulate, lie, cheat and steal to get in to their organizations and take them down and for crying out loud..assign a cybercrime investigator to work with "informants". This isn't a fight against cybercrime, it's a fight against organized crime. treat it like a vapor crime and it will be so in the eyes of politicians, law enforcement and the populace. Treat it like organized and personal crime and people will notice.

When news stories about cybercrime come out, they are gone in a flash, and the threats get cute names like "April Fools worm". Did you know that TJX arrests happened? Significant or not, they did. To be frank, they only got low rung members and affiliates of the ring. How many major news outlets covered it? I can't think of a single one. Instead, cybercrime gets the "on hold treatment". It's like being on hold and hearing that voice say "Don't worry, it's just cybercrime"... "your business is important to us, please stay on the line".

Monday, July 27, 2009

Thanks John

This afternoon John Mellon announced his retirement from the ISFCE. As a member for a few years now and as an active CCE, I take my hat off to you John. You've done an awful lot for this profession, the ISFCE and the CCE community and we all owe you a debt of gratitude for your time, countless efforts and devotion to making the industry, the ISFCE and the CCE what it is today. Enjoy your well deserved retirement.

Thursday, July 23, 2009

Lessons learned - a menagerie

While writing up a paper the other night I got inspired to share some things...some lessons learned from incidents over the past year. Here's to hoping this helps or entertains.

Communication needs to be accurate and timely

When your IRT is in the middle of a widespread incident and you need to notify the organization at large, the information must be accurate. Tech support - your boots on the ground - needs accurate information to take remediation steps at the micro level. This information must also be communicated in a timely manner. At least two communications need to go out within the first 24 hours. One to alert the organization, and the second to provide a status update.

SITREPS are valuable

When you or your IRT are dealing with an incident it is vital to provide Situation Reports or SITREPS to your client and management. The frequency and depth of these SITREPS can be determined by the scope and severity of the incident.

A simple chart like this helps:

Tier 1 Incident - SITREP ea. 1-4 hrs.

Tier 2 Incident - SITREP ea. 8 hrs.

Tier 3 Incident - SITREP ea. 24 hours.

SITREPS should contain the following information.

Who is doing What, Where they are doing it, When it will be done.

Assessment of the situation

Updates on old news

Updates on new news
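If you want to bake the chart and the SITREP contents into tooling, here is a minimal sketch in Python. The interval numbers come straight from the chart above; the function names and the plain-text layout are my own assumptions, not a standard format.

```python
from datetime import datetime, timedelta

# Interval ranges in hours, taken from the tier chart above.
SITREP_INTERVAL_HOURS = {
    1: (1, 4),    # Tier 1: every 1-4 hours
    2: (8, 8),    # Tier 2: every 8 hours
    3: (24, 24),  # Tier 3: every 24 hours
}


def next_sitrep_due(tier, last_sent):
    """Return the latest time the next SITREP should go out for this tier."""
    if tier not in SITREP_INTERVAL_HOURS:
        raise ValueError(f"unknown incident tier: {tier}")
    _, max_hours = SITREP_INTERVAL_HOURS[tier]
    return last_sent + timedelta(hours=max_hours)


def format_sitrep(assignments, assessment, old_news, new_news):
    """Render the four SITREP parts as plain text.

    assignments is a list of (who, what, where, when_done) tuples.
    The layout is hypothetical; use whatever your client expects.
    """
    lines = ["WHO / WHAT / WHERE / WHEN"]
    for who, what, where, when_done in assignments:
        lines.append(f"- {who}: {what} at {where}, done by {when_done}")
    lines.append(f"ASSESSMENT: {assessment}")
    lines.append("UPDATES ON OLD NEWS:")
    lines.extend(f"- {item}" for item in old_news)
    lines.append("UPDATES ON NEW NEWS:")
    lines.extend(f"- {item}" for item in new_news)
    return "\n".join(lines)
```

For a Tier 1 incident this treats the 4 hour mark as the hard deadline; send sooner if the situation is moving fast.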

Partnerships work well in a distributed environment

When you are the incident manager and you do not have full authority over a distributed environment, you must partner with the people in charge of the distributed environment. This is the only way to be successful in a crisis situation. The incident must become everyone's problem with the seriousness being communicated effectively.

Tech support and end users are like eye witnesses

70% of what they tell you will be incomplete, misinformed or just plain wrong.

There will always be information that would have been helpful yesterday

Incidents do not always go perfectly. You will never have the full picture when you need it. Gather what information you can, assess the collected information, and make a decision. Adaptability is one of the key traits of a good incident responder.

Stop trying to prevent the last incident and focus on the next incident

Often times after a significant incident an organization will enter a tailspin trying to solve the last incident. Numerous resources will be poured into making sure 'it never happens again'. The reality of the matter is that it will happen again, just not in the same way. This is why incident follow up is important. After an incident, you do need to address the Root Cause but you need to look forward to the next incident and begin preparation. As a former coach once said "don't stand there and admire the ball after you shoot, keep moving."

In 30 years of computing the security industry has never solved a problem

Every time I go out on a call I am reminded of this nasty little truth. The security industry has never solved a problem. Imagine taking an exam with 8 non-trivial proofs. You are expected to complete them in 30 minutes. This is an almost impossible task. My money is on an incomplete exam and mistakes in the proofs you have attempted. Due to the constant evolution in the technology world, problems never get solved and history repeats itself frequently. It is because of this that Incident Responders should keep current, and pay attention to history.

Don't be afraid to say you don't know

This one is tough for a lot of people to digest. People seem to want the wrong answer instead of a non-committal one. There is nothing wrong with not knowing everything. Better to not know and find out, than to appear to know and show yourself to be wrong later.

Due Diligence is not the same as Investigation

If you are approached by a client and they engage you to perform a task to do their due diligence, it is not the same as investigating a matter to search for the truth. Those that want due diligence are simply looking to CYA. Those that truly want an investigation will be in search of root cause, impact, and conclusion.

Routine Investigations only exist in news articles

Every investigation this past year has been different. The only thing routine about an investigation is the tools and process used. Nothing takes 5 minutes, and getting to point B is never a straight line. Commit your tools and process to memory and train yourself and your team. This way when the investigation changes course you can adapt easily.

Establish working relationships with key vendors you rely on, and customers that rely on you

Incident response is a two way street. If you have a product that your organization relies on to conduct operations, ensure you have a strong working relationship with the vendor. Meet with all vendors at least once per year, if not more. This pays off for both sides and keeps both sides informed of needs and opportunities. In a time of need, you will want that vendor on the phone assisting you with their product. Likewise, if you are serving a client, you want to have a good relationship. Visit your clients when there is not a crisis. This lowers stress and fosters trust and respect.

Don't hold on too tight and remember to breathe

When functioning at a high operational tempo for extended periods of time, you will experience burnout. As a result, efficiency and productivity decrease drastically. Know yourself well enough to know when it's time to decompress and give yourself some breathing room. If you manage a team, take your team out for drinks and laughs once in a while, send people to training, give them comp time. Do anything and everything to keep yourself and your team operating at peak performance levels.

Incident detection should not overwhelm analysis capabilities

When you are drafting budgets or you seek funding for projects that involve incident detection, you should try to remember that incidents require resources to respond to and ultimately analyze data. When detection overwhelms your ability to analyze incidents you experience backlogs and rash decision making. Remember that an analysis takes approximately 20-40 hours on average and a good analysis can not be rushed. Keep analysis requirements in mind any time you are looking to improve your detection. Great, you detected an incident, can you respond to it and analyze it?
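To put rough numbers on that question, here is a back-of-the-envelope sketch in Python. The 20-40 hours per analysis comes from the paragraph above; the 40 analysis hours per analyst per week is purely my assumption (real availability is usually lower), and the function name is made up for illustration.

```python
def weekly_analysis_backlog(incidents_per_week, analysts,
                            hours_per_analyst=40,
                            hours_per_analysis=(20, 40)):
    """Return (best_case, worst_case) backlog hours added per week.

    hours_per_analysis is the 20-40 hour range quoted above.
    hours_per_analyst is an assumed figure, not a measured one.
    """
    low, high = hours_per_analysis
    capacity = analysts * hours_per_analyst
    demand_low = incidents_per_week * low
    demand_high = incidents_per_week * high
    # A backlog only exists when demand exceeds capacity.
    return max(0, demand_low - capacity), max(0, demand_high - capacity)


# Example: detection surfaces 5 incidents a week, and 3 analysts work them.
best, worst = weekly_analysis_backlog(incidents_per_week=5, analysts=3)
print(f"backlog grows by {best} to {worst} hours per week")
```

If the worst case number keeps climbing week over week, better detection is only buying you a longer queue.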

Monday, July 20, 2009

The FTK 2 dilemma

So you're using FTK 2.x...Does the separate database server buy you anything? This is the question I've been asking myself for about a week now. After having good success with FTK 2 on a standalone system I moved to a split box configuration. Per the recommendations here, I put my more powerful system in place as the Oracle Database.

In addition I threw a quad core processor with 8GB and a handful of new SATA 2 drives in to a second system. It's not a brand new system but it meets the specs for an FTK2 worker system.

As it turns out, and in my humble opinion, the documentation appears to be misguided for a two box configuration. Here are a few thoughts.

FTK 2 worker:

- The worker is truly the worker. Splitting the configuration puts the majority of the load on the worker machine. The database system simply shuffles records across the network and handles queries.

- The worker requires a lot of resources - especially while processing a case. While processing a case, the CPU/memory/disk combination kept the worker box pegged, meanwhile the database server was sleeping. Pictures coming soon.

- The worker system not only does the heavy lifting, it also needs to manage the GUI. Try processing evidence and moving around the GUI..you'll see what I mean.

- The database server is mostly idle until you load it up with data and need to 'work' the case. Even then, it doesn't require a lot of resources. It needs to fulfill queries and this isn't a transaction level oracle server. It does a lot of reading at one time and a lot of writing at one time.

- The database server only needs to meet the specs of the worker machine. It does not need to be more powerful than it as the worker machine is doing the heavy lifting.

- The database server requires disk and memory. CPU is nice to have but it doesn't need to be dual quad cores when you only have one worker.

If doing a two box configuration, here are my worker recommendations:

CPU - Quad core or Dual Quad Core CPU. The 9400+ series for core 2 quad, or if you've got the money for a new system, go with the i7. If you've really got some cash..go quad core xeon.

RAM - At least 2GB memory for each CPU Core; 4GB/Core if you can afford it. Trust me, don't skimp on the RAM.

DISK - This is broken down in to categories.

- OS: A raid 1 works nicely here.

- Index drives: At least a 4 drive Raid-0. This is where your indexes will be stored. These drives need to be the fastest available. 300GB WD velociraptors should do the trick. However, remember your storage requirements. Expect indexing to use roughly a fifth to a quarter of the total evidence set, e.g. 1TB evidence = approx. 200-250GB indexing space.

- Image drives: When you load a case you want to put your images on a locally attached storage media. I'd go with at least a 2 drive Raid-0.

Adaptec 5805(internal) or the 5085(external) seems to be the best controller out there for the price.

And here are my oracle server recommendations:

CPU - A single quad core CPU.

RAM - 2GB/core.

DISK -

- OS: Use a Raid 1 here.

- This is a database server. The question you need to ask yourself is: Do I want redundancy? If yes, go with at least a 4 drive raid 10, if not more. If no, go with a 4+ drive raid 0. Remember your space requirements. Expect to use 10% of the size of the evidence for database storage in each case, in addition to the minimum 6GB.
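The sizing rules of thumb above are easy to turn into a quick calculator. This is a minimal sketch in Python, not anything from the FTK documentation: the function names are made up, the index percentage defaults to the one-fifth figure and is left as a parameter because the 1TB example suggests it can run higher, and the database figure is the 10% plus the 6GB minimum.

```python
def ftk_storage_estimate_gb(evidence_gb, index_percent=20,
                            db_percent=10, db_minimum_gb=6):
    """Estimate index and database space (in GB) for one case.

    index_percent: share of evidence size used by indexes (rule of thumb).
    db_percent / db_minimum_gb: database growth per case on top of the
    minimum database footprint.
    """
    index_gb = evidence_gb * index_percent / 100
    database_gb = db_minimum_gb + evidence_gb * db_percent / 100
    return {"index_gb": index_gb, "database_gb": database_gb}


def ram_estimate_gb(cpu_cores, gb_per_core=2):
    """RAM sizing at 2GB per core (use 4 if you can afford it)."""
    return cpu_cores * gb_per_core


# Example: a 1TB evidence set on a quad core worker.
print(ftk_storage_estimate_gb(1000))
print(ram_estimate_gb(4))
```

Treat the output as a floor for planning purposes; cases rarely shrink once processing starts.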

And if you combine the two systems in to one here are my recommendations:

CPU - Dual Quad Core Xeon

RAM - 4GB/Core

DISK - Face it, you don't have enough space internally, even the cosmos can be tight on space (best case ever). Get an external disk array. Addonics has some very interesting cage configuration options here. Others have done the homework to spec out their own arrays, saving $$. You'll want Multilane E-sata or SAS drives. As with any I/O intensive operation..you need spindles to spread the load.

- OS: Raid 1 still works here.

- Indexing

- Database

- Images

And don't forget the backups. Backup servers/devices don't need to be high powered, they need to be reliable. Get a raid 5 NAS or a bunch of disks in older hardware.

Now that hardware is out of the way let's look at the real dilemma.

Does a separate database server provide any utility when you have two computers? My response is no, it doesn't. An average case these days will be fully processed (indexed, hashed, KFF, duplicates etc.) in about 24 hours. I haven't seen any benefit in moving to two systems..it still takes 24 hours or more. There are major drawbacks to a two box configuration as well.

- FTK 2 is already so heavily over-engineered that the last thing you need is added network and agent complexity. The worker loses connectivity even on a dedicated link. How does it recover from this? Does it recover everything completely?

- Backups on a two box system require their own whitepaper. If a GUI product requires a separate paper for backing something up when the single system backup is straightforward, there are too many variables.

- You now have to maintain two operating systems, two sets of hardware, twice the expense and two times as many failure modes.

Addendum Pictures:

The Worker while processing a case

And the database server at the same time

Thursday, July 16, 2009

Drive encryption

Target drive encryption is not a standard practice...the question is..should it be?

First some assumptions.

- The source drive is not encrypted

1) You're an intrusion examiner. You are investigating PII data theft and the computer you happen to be imaging for the case contains 200,000 SSNs. You're imaging the data that's handled by the custodian and the PII of 200,000 individuals nationwide. This now legally makes you the custodian of the data. Your image isn't encrypted..there's only 1 tool I know of that encrypts the images. If the target drive gets stolen, say goodnight to your livelihood. Errors and Omissions insurance won't cover the cost of notification, credit monitoring and lawsuits.

Should you encrypt the drive?

2) You're a forensic examiner. You are investigating an IP theft case. You image a drive from a laptop. The data on the drive is considered to be worth millions to the company. You are now in possession of this very important data that belongs to someone else.

Should you encrypt the drive?

Asset thefts are a pretty common occurrence and they tend to be opportunistic. Backup tapes, hard drives, laptops, USB keys, BlackBerries...all have been stolen/lost.

As forensic examiners we are the custodians for a lot of other people's stuff. We compile images of a lot of private information and store them in an unencrypted format. The questions in my mind are: does chain of custody trump the need for full disk or image encryption? Should target drives/images be encrypted as an industry standard?

What do you think?

Monday, July 13, 2009

If an identity gets stolen on the internet

Does anyone notice?

How about when 1000 identities get stolen? What about 2000? What about 50,000?

While doing a "routine investigation" of a Qakbot infection I discovered a dropzone for the malware. I say 'a' and not 'the' because the DZ was a configuration option and it could be updated at any time. The DZ I found was full of thousands of keylog files and other data uploaded from infected systems. This information was promptly sent to some contacts in the FBI.

What I begin to wonder is...did any one of these people actually know their identity was at risk or in fact actually stolen? How many of the companies whose users were infected actually knew about the real risk this malware infection posed to their organization? How many FTP servers were abused as a result of this infection, how many webservers were compromised? How many sensitive intranet systems were exposed?

Qakbot, like many current threats, was short lived, with longer lasting effects. It was a sortie if you will, a quick blitz to get out, infect thousands of systems, capture as much information as possible and send it back to the people behind it to sell, or use in other attacks.

This type of blitz happens daily. For every Conficker worm there are thousands of malware samples that use guerrilla-style tactics and do just as much damage as the media friendly worms, and it is these smaller samples that eat away at individuals and organizations. Certainly there are large breaches that cause massive damages in one fell swoop, but it is more common to see smaller infections get ignored, and they therefore create more of a problem in the long term. It is unfortunate when organizations don't take these small infections seriously. How many malware infections made the media in the past year that weren't Conficker or another major media frenzy type worm and still caused serious damage?

Like this one(or 8000), or this one, or this one...hopefully you get the point.

Many organizations don't discover these simple compromises for weeks or months and when they do, it's likely because their antivirus product updated definitions (which are largely ignored) or a third party identified the compromise. Do a quick evaluation of your customers and your own internal organization and look at the malware infections that have taken place over the past 6 months. How many affected individuals reset their passwords? Did any of the systems get "cleaned" and not get rebuilt? How many of those individuals had access to company web or FTP servers? How many of those people are in your Business Service Centers or administrative offices? How many of those people work with sensitive data on a daily basis? How many take their laptops home and let little Johnny play on them?

OR

Suppose the following:

John Q Public works for your organization..let's call it Booze Brothers inc. John works in the HR department. John is on vacation and logs in to a public kiosk in the business office at the hotel that happens to be infected with Qakbot to do the following five things:

- Visit amazon to see when that special gift for his daughter will arrive at home

- Check his bank account because he was waiting on a reimbursement

- Check personal email account to see how things are going at home

- Log in to twitter to tell everyone how he's doing

- Log in to facebook to update his page

Based on some statistics it is likely that John Q Public just exposed your organization because he uses the same password for one of the five sites above as he does at your organization. The lines between personal identity and work identity are blurred because of this password synchronization that is a common practice. His information gets sent to the DZ and later it is culled and re-used, sold or traded. It may be 24 hours, it may be a week but you'll likely see John Q's account used in an attack against your organization - maybe phishing, maybe used to upload javascript to a webserver, or maybe just a brute force attack.

Identities fall each day to the malware infections that plague us, though recall I don't believe in simple malware infections due to the gateway malware theory. As always, if you haven't done so in the past year, it's probably time to revisit your IR plan to address this sort of stuff.

Saturday, July 11, 2009

Real world APT

In a break from the traditional topics I tend to discuss here, I wanted to spend a little time on APT, or Advanced Persistent Threat, since there's been a surge in discussion around it in the past few days. Like Richard noted, very few people know what it is or have experience with it. Not only that, those that have been exposed to it don't talk about it for various reasons. Here's my experience with it in a very simplified post.

Their behaviors:

- They tend to work from 9-5, suggesting they are professionals and this is their job

- They are methodical in their work and it is not random

- They target defense manufacturers, military and government personnel

- They make use of compromised SSL certificates

- They make use of compromised credentials to gain access to military and government email and documents

- They compromise systems in traditional manners, but they fly in under the radar and are precise in their compromises

- They use customized tools

- They leverage tools available on the compromised systems
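The 9-5 observation is something you can test against your own timeline data. Here's a minimal sketch, assuming you already have a list of UTC timestamps for the activity you attribute to them (logins, file access, tool execution). It simply checks which UTC offset makes that activity look most like a workday. The function names and the sample timestamps are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def hour_histogram(timestamps, utc_offset_hours=0):
    """Count events per local hour for a given UTC offset."""
    shift = timedelta(hours=utc_offset_hours)
    return Counter((ts + shift).hour for ts in timestamps)


def workday_fraction(timestamps, utc_offset_hours, start=9, end=17):
    """Fraction of events that fall between start and end local time."""
    if not timestamps:
        return 0.0
    hist = hour_histogram(timestamps, utc_offset_hours)
    in_hours = sum(n for hour, n in hist.items() if start <= hour < end)
    return in_hours / len(timestamps)


def best_offset(timestamps):
    """Return the UTC offset (-12..+14) that best fits a 9-5 schedule."""
    return max(range(-12, 15), key=lambda off: workday_fraction(timestamps, off))


# Hypothetical event times in UTC, for illustration only.
events = [
    datetime(2009, 7, 6, h, 15, tzinfo=timezone.utc) for h in (1, 2, 3, 5, 6, 7, 8)
]
offset = best_offset(events)
print(offset, workday_fraction(events, offset))
```

A strong fit only suggests a schedule. It is one data point to weigh with everything else, not attribution on its own.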

Like any attacker, they make mistakes. I won't share those here considering the public nature of a blog, but suffice it to say that the trail is evident.

Most people are intent on finding the bad guys and removing the threat from their organization. This is great and all..but this is also where counter-intelligence plays a role. Passive monitoring can pay off if you don't rush to shut them down. They do not make half-assed attempts at compromising assets and they make good use of their time on a compromised asset. Rapid detection, analysis and decision making must follow suit.

Digital Forensics and Incident Response techniques play an important role in monitoring their activities.

How can you combat them? I use what I've been calling the holy trinity of Digital Forensics.

- Memory dumps

- Disk images

- Emergency NSM

In the words of others, these guys are "top shelf". They are professional reconnaissance teams, they slip in under the radar, they do not waste time, and they have one goal in mind: to collect information. There are ways to identify them and watch them, but you must move as quickly as they do, and you and your organization need to be as committed as they are.

Thursday, July 2, 2009

Unsung tools - Raptor Forensics

Every so often you come across tools that get very little press. One such tool, in my humble opinion, is the Raptor Forensics bootable CD from the fine folks at Forward Discovery. In short, this CD needs to be in your toolkit if it isn't already.

One of the most popular questions I see is "How do I acquire a macbook air?". While I'll try to address that question specifically, I want to widen the scope because it applies to any mac system that needs to be imaged.

When dealing with a macbook air your options are somewhat limited.

- There's no firewire

- There's no network card (unless you use the usb port)

Well, obviously if the box is on you can use F-Response to acquire it rather quickly. You can only do this, however, if you have the proper credentials.

What if you're going in clandestinely? What if the system is handed to you and it's off? This is where Raptor Forensics bootable CD comes in.

Burn the iso

Attach a powered USB hub to the macbook air.

Attach a USB target drive formatted however you see fit(though you can do this within Raptor).

Attach a USB cd drive.

Insert the cd.

Boot the mac while holding down 'c'.

The environment will boot.

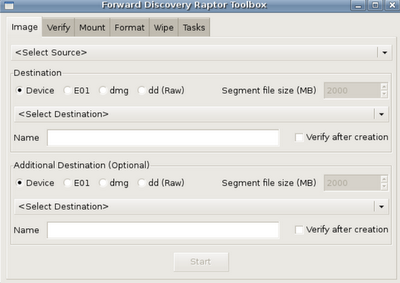

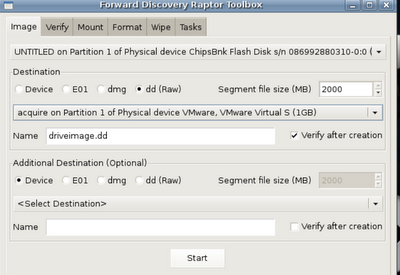

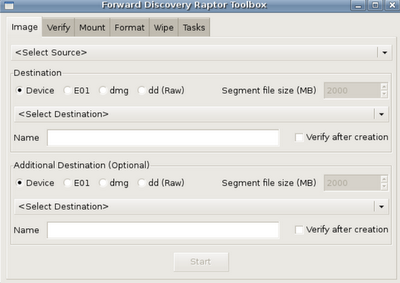

After the system boots click on the Raptor Toolbox. When it opens you'll see the following.

This is where my biggest problem with the tool originates. The workflow from left to right is all out of whack. In order to acquire an image, you need to mount the target drive. In order to mount the target drive it needs to be formatted. In order to be formatted it should be wiped. Now, you've probably already done this, but in my opinion the workflow in this toolkit should be changed.

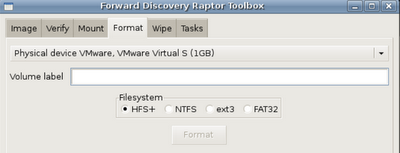

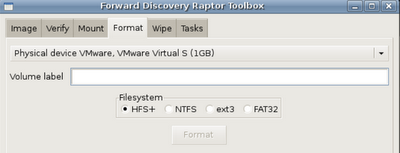

That said, let's format and mount a target drive. First, click the 'format' tab.

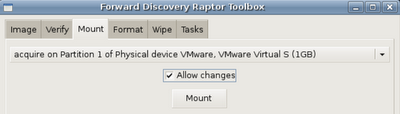

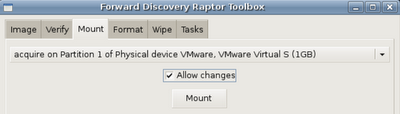

Next, click the 'mount' tab and select your target device. You'll want it to be read/write.

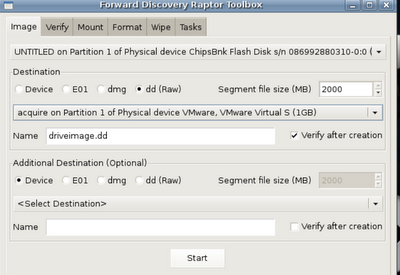

Great! Now that it's formatted and mounted let's acquire something!

In this case I'm imaging a USB key, but it works just fine for the macbook air and other macs. Since everything is point and click it's a pretty straightforward process. Just select the source, target and name, make sure you select 'verify', and then click Start.

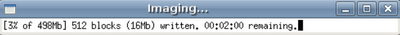

An imaging window will appear as well as a verification window (which looks the same) when the time comes.

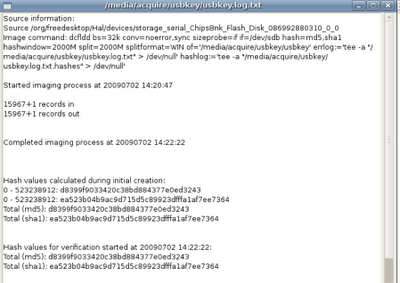

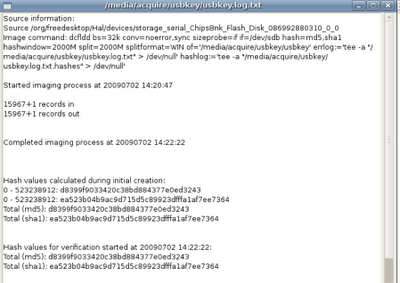

Once acquisition and verification complete you'll see a nice log window appear that shows the acquisition command line and hashes.

And it's just that simple. Hopefully this helps those in need. Raptor Forensics is a great utility to include in your kit and there are 239 reasons it's better than Helix for this purpose.


Saturday, June 20, 2009

What do you seek?

If you work in this field long enough you will come across a situation where you need to justify your methodology. You will be asked to show why you need to look at all of the data points you look at. It's par for the course. When I get asked to do this I respond simply by asking the following question in return.

Do you seek an answer or do you seek the truth?

This question tends to make the doubter pause. When you are staring a potentially damaging case in the face, do you seek an answer or do you seek the truth? More importantly do the decision makers seek an answer or the truth?

There is a school of thought out there that says if any file containing sensitive data is accessed after the system is compromised, then analysis should stop right there, a line should be drawn, and notification should go out for anything accessed after the compromise date. I talked about it back in December when discussing footprints in the snow. Think on that for a moment. If a system in your organization is compromised and you run an antivirus scan and trample on access times, it means you're done, you're notifying, and you're going to have a lot to answer for when your customers get a hold of you. You will not have given the case its due diligence.

In just a second you'll see a graph that I generated. It shows file system activity based on a mactime summary file. Take a few moments to analyze the graph. *I did have to truncate the data set. There were hundreds of thousands of files touched on 5/12*

Does it tell you anything? Imagine the system were compromised on 5/5/09. There are a few things that should stand out almost immediately, such as the dramatic increase in file system activity beginning on 5/11 and continuing through 5/12. Or, more simply, that there is a story to be told here.
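If you'd rather have a script point at the days worth asking about, here's a minimal sketch. It assumes the daily summary has already been reduced to simple `date,count` lines; the exact layout of a mactime summary file depends on the options used, so treat the parsing as an assumption. The sample counts, the threshold and the function names are made up for illustration and are not the data behind my graph.

```python
import csv
import io
from statistics import median


def load_daily_counts(text):
    """Parse 'date,count' lines into a list of (date, count) tuples."""
    rows = csv.reader(io.StringIO(text))
    return [(row[0].strip(), int(row[1])) for row in rows if len(row) == 2]


def flag_spikes(daily_counts, factor=10):
    """Return the days whose count exceeds factor times the median count."""
    baseline = median(count for _, count in daily_counts)
    return [(day, count) for day, count in daily_counts if count > factor * baseline]


# Hypothetical numbers, for illustration only.
sample = """\
5/5/09,420
5/6/09,310
5/7/09,290
5/8/09,350
5/11/09,48000
5/12/09,95000
"""

counts = load_daily_counts(sample)
for day, count in flag_spikes(counts):
    print(day, count)
```

A spike only tells you where to look. It doesn't tell you why it happened; that part still takes interviews, logs and the rest of the data points.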

Do you seek an answer or the truth?

A person in search of an answer is going to get a response of "ZOMG the attacker stole a lot of data and you're notifying on every single file contained on the system that contains PII data". If you seek an answer you are not interested in the story that needs to be told, you are not interested in any of the details of the case. You want simply to put the matter to rest, get it behind you and move on to the next case that will be decided by the uninformed.

A truth seeker will ask what happened on 5/11 and 5/12. A truth seeker will interview key individuals, a truth seeker will evaluate the log files present on the system and many other data points to determine what the cause was. A truth seeker will want to hear the story based on your expert opinion, which you reached by examining all sources of data.

A truth seeker will take interest upon hearing that the system administrator not only scanned the hard drive for malware, but he copied hundreds of thousands of files from the drive. A truth seeker will want to see the keystroke log files. A truth seeker will thank you for decrypting the configuration file and output used by the attacker to determine intent and risk. A truth seeker will ask you to look at network logs and a variety of other sources of data to reach a conclusion and render an opinion.

So, the next time someone questions your methodology, ask them if they want an answer or the truth. If all they want is an answer, more power to them; ignorance is bliss after all. But there is always a story to be told.
